Lossy Compression using Neural Networks

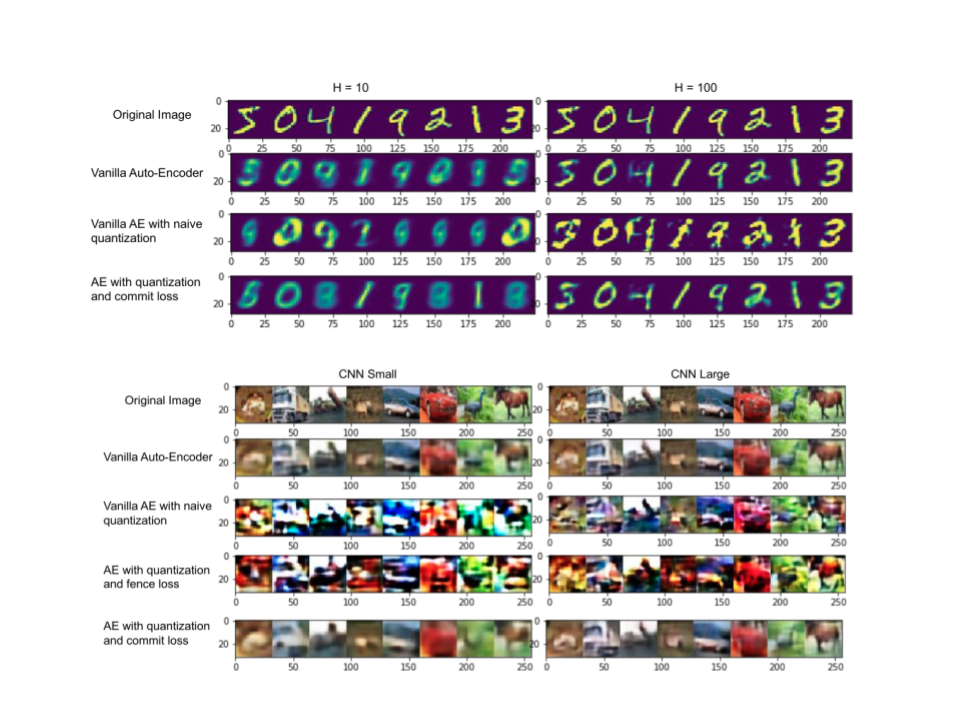

We used autoencoder models with discrete latent-space representations to perform lossy compression of image and text data without a significant loss in performance relative to continuous counterparts such as Variational Autoencoders (VAEs). To improve the compression ratio, we first applied a naive quantization technique and then incorporated a quantization objective into the loss function, so that the outputs lie closer to quantized values. Finally, we tried a training-time quantization technique and performed extensive experiments on several image and text datasets.
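The first two quantization ideas above can be illustrated with a minimal NumPy sketch. It assumes plain uniform quantization of real-valued outputs clipped to a fixed range of [-1, 1] at 8 bits; the range, the bit width, and the helper names (`quantize`, `dequantize`, `quantization_penalty`, `compression_ratio`) are hypothetical choices for illustration, not the project's actual code. The penalty is the mean squared distance between each value and its nearest quantization level, which could be added to the reconstruction loss with some weight.

```python
import numpy as np

# Hypothetical defaults: uniform quantization over [-1, 1] with 8 bits.
Z_MIN, Z_MAX, NUM_BITS = -1.0, 1.0, 8


def quantize(z, num_bits=NUM_BITS, z_min=Z_MIN, z_max=Z_MAX):
    """Naive post-training quantization: map each value to an integer code."""
    levels = 2 ** num_bits - 1
    step = (z_max - z_min) / levels
    z_clipped = np.clip(z, z_min, z_max)  # values outside the range saturate
    return np.round((z_clipped - z_min) / step).astype(np.int64)


def dequantize(codes, num_bits=NUM_BITS, z_min=Z_MIN, z_max=Z_MAX):
    """Map integer codes back to the real-valued quantization levels."""
    levels = 2 ** num_bits - 1
    step = (z_max - z_min) / levels
    return z_min + codes * step


def quantization_penalty(z, num_bits=NUM_BITS, z_min=Z_MIN, z_max=Z_MAX):
    """Mean squared distance from each value to its nearest quantization level.

    In an autodiff framework the rounded target would be treated as a
    constant, so the gradient of this term pulls z toward the nearest level.
    """
    z_hat = dequantize(quantize(z, num_bits, z_min, z_max), num_bits, z_min, z_max)
    return float(np.mean((z - z_hat) ** 2))


def compression_ratio(num_bits=NUM_BITS, original_bits=32):
    """Storage ratio when float32 values are replaced by num_bits-bit codes."""
    return original_bits / num_bits


if __name__ == "__main__":
    z = np.array([0.1, -0.5, 0.73])
    codes = quantize(z)
    print("codes:", codes)
    print("reconstructed:", dequantize(codes))
    print("penalty:", quantization_penalty(z))
    print("compression ratio vs float32:", compression_ratio())
```

A hypothetical combined objective would then be `reconstruction_loss + lam * quantization_penalty(z)`, where `lam` trades reconstruction quality against how tightly the outputs cluster around quantized values. Fewer bits give a higher compression ratio but coarser levels, so the penalty (and the reconstruction error after quantization) grows.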